The department continues to drive innovation in the foundational and applied technologies that enable productive data-driven discovery.

Professor Eli Upfal, who serves as deputy director of Brown’s Data Science Initiative, works to make data exploration tools more useful and rigorous. As more people use analytics software to explore large datasets, the chances increase that random fluctuations in data will be mistaken for significant patterns. Such “false discoveries” could have dire consequences — particularly in areas such as healthcare or law enforcement. Upfal and colleagues have developed data exploration systems with advanced statistical safeguards that help users avoid false discoveries. The research served as the foundation for a commercially available data exploration product and a new startup company called Einblick.

In other data science research, Assistant Professor Malte Schwarzkopf and colleagues recently unveiled a new framework that dramatically speeds up data exploration. Most data scientists use the programming language Python for many data analysis tasks. However, industry-standard data science frameworks struggle to execute Python code efficiently, which imposes a "performance tax" on users of this user-friendly language. The new framework developed by Schwarzkopf and his colleagues, called Tuplex, eliminates that performance tax and runs Python queries up to 90 times faster than current systems. That speedup could vastly improve productivity for data scientists.

Breakthroughs in Artificial Intelligence

Brown researchers are at the leading edge of artificial intelligence (AI), the field that has driven many of the advances in data and computational science over the past decade. Assistant Professor Ellie Pavlick is working with fellow faculty members Stefanie Tellex, Carsten Eickhoff and others on a groundbreaking approach to language processing. Current AI systems learn language by poring over vast amounts of text. That works well for learning to recognize words, but less well when it comes to actually understanding meaning and context.

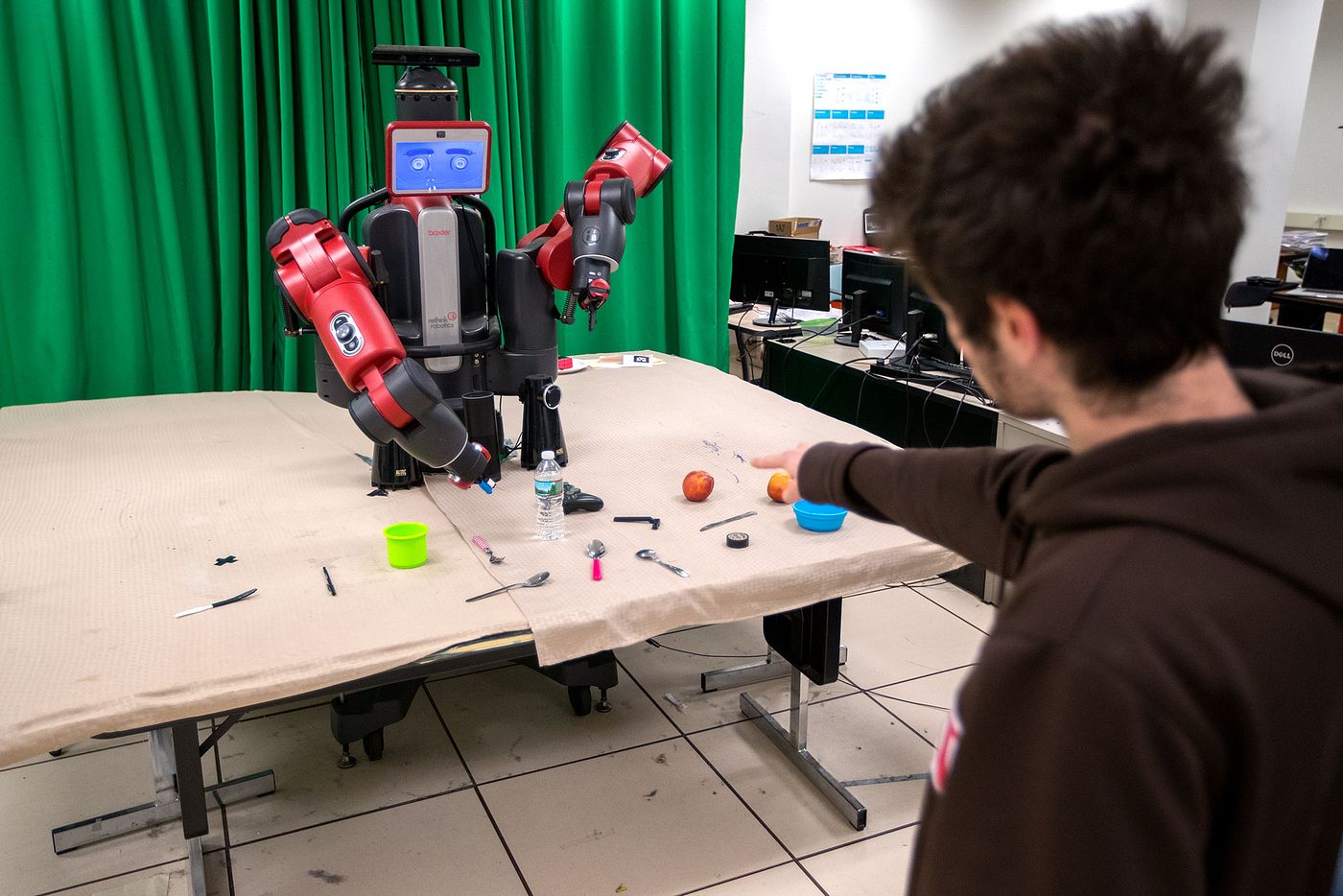

Pavlick and her colleagues are developing ways to teach computers to read that resemble how children learn: the computer learns language by connecting words with the objects and actions it observes in the real world. The project has earned the largest single funding award in the department's history, a contract of over $6 million from the U.S. Defense Advanced Research Projects Agency (DARPA).

Also working in AI, Professor Michael Littman recently chaired an international panel of researchers tasked with producing a report on the state of the field. The report is the second from AI100, a Stanford-based project that aims to track AI development at regular intervals over the next century.

In this newest edition of the report, released in September 2021, Littman and his colleagues found that advances in computer vision, language processing and other areas mean that more people than ever before interact with AI every day, whether they are getting movie recommendations or receiving medical diagnoses. With that success, however, comes a renewed urgency to understand and mitigate the risks and downsides of AI-driven systems, such as algorithmic discrimination or the use of AI for deliberate deception. The panel concluded that computer scientists must work with experts in the social sciences and law to ensure that the pitfalls of AI are minimized.